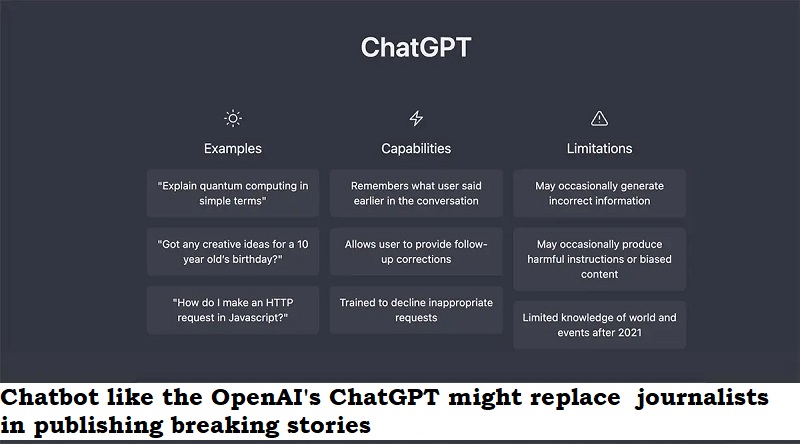

Artificial intelligence (AI) language models, such as ChatGPT, are increasingly being used in the news industry. These models can generate human-like text, which makes it possible to produce news stories quickly and efficiently.

While AI language models can be useful for routine, data-driven content, such as weather reports or sports results, there are concerns about their ability to replace human journalists in covering breaking news.

One of the main concerns is bias in the data used to train these models. If the training data is biased, the news stories the models produce may be biased as well, which could seriously damage public trust in the media.

Another concern is the lack of human judgment and ethical consideration in AI-generated news stories. Human journalists are trained to verify sources, fact-check information, and adhere to ethical guidelines. AI language models, by contrast, generate plausible-sounding text without the same judgment or understanding of ethical considerations, and they cannot independently verify the claims they produce.

However, some argue that AI language models could be useful in supporting human journalists, rather than replacing them. For example, AI models could be used to quickly generate summaries of breaking news stories, which human journalists could then expand upon and verify.
