Humans’ ability to think critically has been a key factor in their evolutionary progress. In psychology, critical thinking is linked to ‘theory of mind,’ the ability to recognize that other people have mental states of their own, which may differ from yours. For instance, if a colleague appears deeply engrossed in a task, you would likely refrain from gossiping with them. Recognizing that their mental state differs from yours is an example of theory of mind. On this view, the capacity for critical thought is a defining factor in how far a species advances.

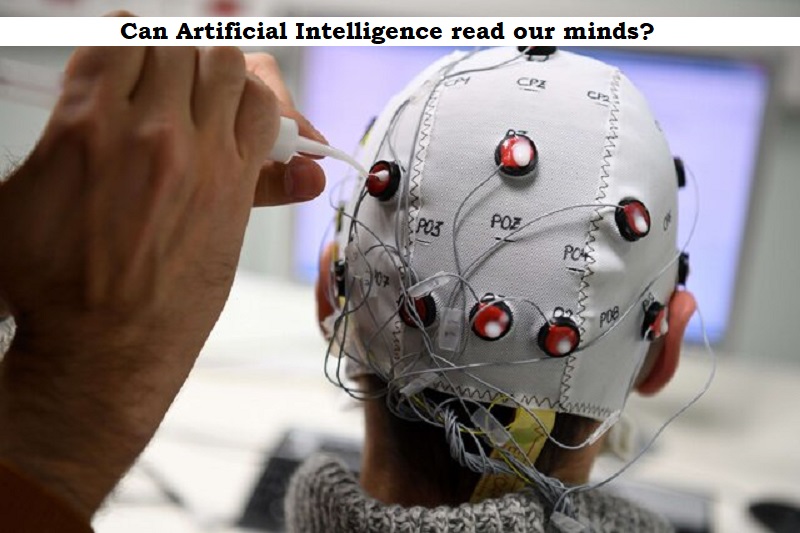

Artificial intelligence (AI) chatbots like ChatGPT, which are trained on vast amounts of internet data, have become standard tools in workplaces and educational settings. Their rise has prompted concerns about whether AI can read our minds.

According to Michal Kosinski, a psychologist at the Stanford Graduate School of Business, the March 2023 version of GPT-4, the model OpenAI released that month, could solve 95% of ‘theory of mind’ tasks. These abilities were previously considered uniquely human. Kosinski suggests that this theory-of-mind-like ability may have emerged spontaneously as a byproduct of language models’ improving language skills.

However, Tomer Ullman, a psychologist at Harvard University, has shown that small changes to the prompts can drastically alter the models’ answers. Maarten Sap, a computer scientist at Carnegie Mellon University, fed over 1,000 theory of mind tests into large language models and found that even the most advanced transformers, such as ChatGPT and GPT-4, passed only about 70% of the time. Sap believes that even a 95% pass rate would not be evidence of real theory of mind.

AI currently struggles with abstract reasoning and often makes ‘spurious correlations,’ as reported by the New York Times. The debate therefore continues over whether AI’s natural language processing abilities can match those of humans. A 2022 survey of natural language processing scientists found that 51% believed large language models could eventually ‘understand natural language in some nontrivial sense,’ while 49% believed they could not.
